An Introduction to the Hardware of IBM Spectrum Fusion HCI

IBM created Spectrum Fusion to address the data challenges that organizations face when moving applications out of pilot and into production. Clients want to protect application data, ensure application availability, and access any data, anywhere. They also want those capabilities to be simple and consistent: they need to operate, look, and feel the same. This is what Spectrum Fusion delivers.

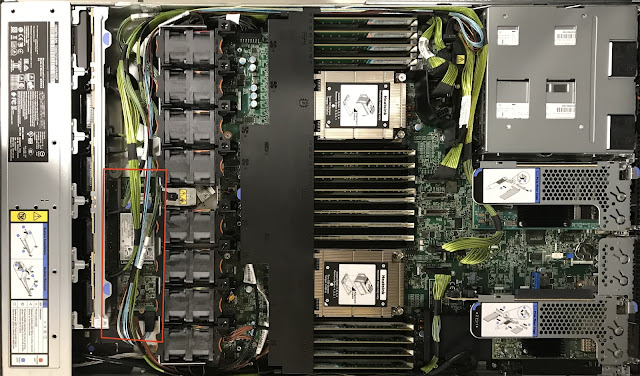

This article focuses on the design of the Spectrum Fusion HCI integrated hardware and software offering. Spectrum Fusion HCI was designed to be simple to deploy, simple to operate, and simple to scale up with increased demand. It was designed to be resilient to failure by utilizing redundant components. And it was designed to provide a high level of performance by using high-speed network connections and optional GPU acceleration.

Simple to Deploy, Operate and Scale

A fully configured hardware offering means clients do not have to spend time specifying and procuring hardware that works together and efficiently supports the software stack. The Spectrum Fusion HCI product team has already done the work of choosing components that go together and are well suited for running the OpenShift software stack and supporting the Spectrum storage services. The principal components of Spectrum Fusion HCI are:

- Storage servers populated with NVMe drives to provide computing power and storage, each with 32 CPU cores and 256GB of RAM

- Application servers without NVMe drives to boost the computing power without adding storage, each with 32 CPU cores and 256GB of RAM

- GPU servers equipped with NVIDIA A100 GPU PCIe adapter cards for handling AI workloads

- A pair of 100Gb high-speed Ethernet switches for handling application and storage traffic

- Intelligent power distribution units (PDUs)

- A pair of 1Gb management Ethernet switches to provide out-of-band management of the servers, switches, and PDUs

- A 42U rack enclosure

Advantages of an integrated hardware and software offering come from the ways that these two complementary pieces of the offering work together. The system management software was developed with the knowledge of what components can be included in the offering, and so the software was written to optimally configure the components and take advantage of their strengths. The system management software also monitors the system to make sure all components continue to function properly and will use the Call Home feature to alert IBM support of any hardware failure or serious error that it detects. And when the time comes to apply firmware updates to the system, it is the integrated management software that is used to orchestrate the updates.

The base configuration of Spectrum Fusion HCI consists of six servers with storage plus the two management switches and the two high-speed switches. Scaling up the computing power of the system by adding servers is simple because all the rails, cables, and power connections that might ever be needed are already built into the system. Scaling up storage capacity by adding drives is simple because the NVMe drives are hot-pluggable into the front slots on the servers and require no downtime to be added.

Resilient to Failure

Spectrum Fusion HCI has been designed to eliminate single points of failure, and it does this by including redundant hardware for each of its critical components. Redundancy not only protects against the failure of a component, it also makes it possible to update component firmware without downtime by taking components offline one at a time to be updated and restarted.

Both the high-speed switches and the management switches are configured in pairs with MLAG (Multi-Chassis Link Aggregation Group) so that if one switch in a pair fails, the other switch can take over and maintain all the active connections. LACP (Link Aggregation Control Protocol) is used so that each connection to a pair of switches uses two cables, one to each switch. If there is a problem with one of the cables or ports, the other cable or port keeps the connection active.

Every server and switch has two power supplies, and these power supplies are connected to different PDUs. There are a total of six PDUs in the system: four in the side pockets of the rack, and another pair that is horizontally mounted in the rack. If a PDU fails, a power supply fails, or a power cord is accidentally disconnected, the component will still have a second power source and will be able to continue running.
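The dual-feed rule above can be checked mechanically. The following is a minimal sketch, not part of the product: the component names, PDU names, and the cabling map are hypothetical, and the only assumption taken from the article is that each component has two power supplies that should land on different PDUs.

```python
from typing import Dict, List, Tuple

# Hypothetical cabling map: component name -> PDUs feeding its two power supplies.
PowerMap = Dict[str, Tuple[str, str]]


def find_power_single_points(power_map: PowerMap) -> List[str]:
    """Return components whose two power supplies share one PDU."""
    return sorted(name for name, (a, b) in power_map.items() if a == b)


def survivors_after_pdu_failure(power_map: PowerMap, failed_pdu: str) -> List[str]:
    """Return components that still have at least one live power feed."""
    return sorted(
        name
        for name, pdus in power_map.items()
        if any(pdu != failed_pdu for pdu in pdus)
    )


# Illustrative data only; the last entry is deliberately miswired.
example = {
    "storage-server-1": ("pdu-1", "pdu-2"),
    "high-speed-switch-1": ("pdu-3", "pdu-4"),
    "mgmt-switch-1": ("pdu-5", "pdu-5"),
}

print(find_power_single_points(example))
print(survivors_after_pdu_failure(example, "pdu-5"))
```

With correct cabling the first list is empty and every component survives the loss of any single PDU.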

The cluster of storage servers is configured to store data across the storage cluster using 4+2P erasure coding. In this configuration, the failure of two storage servers can be tolerated without any loss of data. The OS boot drives in all the Spectrum Fusion HCI servers are configured in redundant pairs using RAID 1 hardware storage controllers, so that if one of the two OS boot drives fails, the server can continue running with just one.
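To make the trade-off behind 4+2P concrete, here is a small arithmetic sketch. It assumes the usual reading of the notation, four data strips plus two parity strips per stripe, and it ignores file system metadata and any reserved spare space, so real usable capacity will be lower than the figure it reports. The function name is illustrative.

```python
def erasure_code_profile(data_strips: int, parity_strips: int) -> dict:
    """Summarize an N+MP erasure code: stripe width, fault tolerance, efficiency."""
    total = data_strips + parity_strips
    return {
        "strips_per_stripe": total,
        "tolerated_failures": parity_strips,      # one lost strip per parity strip
        "usable_fraction": data_strips / total,   # share of raw capacity holding data
    }


profile = erasure_code_profile(4, 2)
print(profile["strips_per_stripe"])            # 6
print(profile["tolerated_failures"])           # 2
print(round(profile["usable_fraction"], 3))    # 0.667
```

Six strips per stripe lines up with the six storage servers in the base configuration, and roughly two thirds of raw capacity is available for data before other overheads.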

Providing a High Level of Performance

The storage servers are the basic building blocks of Spectrum Fusion HCI. Each system has a base configuration of six storage servers that are combined to create the system's storage cluster. Each server has a minimum of two NVMe storage drives, which can be increased to a maximum of ten per server. For clients running AI applications, Spectrum Fusion HCI can be configured with an optional pair of GPU servers. These are 2U servers, each with 48 CPU cores, 512GB of RAM, and three NVIDIA A100 GPU 40GB PCIe adapter cards, giving a total of six A100 GPUs across the pair.
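The figures in this paragraph multiply out as follows. This is a back-of-the-envelope sketch using only the numbers quoted above; the constant and function names are illustrative, and drive capacities are left out because the article does not state them.

```python
BASE_STORAGE_SERVERS = 6     # base configuration
MIN_DRIVES_PER_SERVER = 2
MAX_DRIVES_PER_SERVER = 10
GPU_SERVERS = 2              # optional pair
GPUS_PER_SERVER = 3
GPU_MEMORY_GB = 40           # per A100 card


def drive_count_range(servers: int = BASE_STORAGE_SERVERS) -> tuple:
    """Return (minimum, maximum) NVMe drive count for the given number of servers."""
    return (servers * MIN_DRIVES_PER_SERVER, servers * MAX_DRIVES_PER_SERVER)


def gpu_totals() -> dict:
    """Return total GPU count and total GPU memory for the optional GPU pair."""
    gpus = GPU_SERVERS * GPUS_PER_SERVER
    return {"gpus": gpus, "gpu_memory_gb": gpus * GPU_MEMORY_GB}


print(drive_count_range())   # (12, 60)
print(gpu_totals())          # {'gpus': 6, 'gpu_memory_gb': 240}
```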

Spectrum Fusion HCI has a high-speed network for use by the storage cluster and by applications. The high-speed network is built around a pair of 32-port, 100Gb Ethernet switches. All of the storage servers, application servers, and GPU servers have a 2-port, 100Gb Ethernet adapter. One port on this adapter is connected to the first high-speed switch and the second port is connected to the second high-speed switch. These 100GbE connections are reserved for use by the Spectrum Scale ECE storage cluster. All of these same servers also have a 2-port, 25Gb Ethernet adapter. Using breakout cables that split a 100GbE port on the switch into four 25GbE ports, one port on the server's 25GbE network adapter is connected to the first high-speed switch and the second port is connected to the second high-speed switch.
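The cabling scheme above implies a simple port budget on each high-speed switch. The sketch below counts only server-facing ports under the stated rules (one 100GbE link per server per switch, plus one 25GbE link that shares a broken-out 100GbE port with up to three others). It does not account for data center uplinks, links between the two switches, or any other reserved ports, so the "free" figure is an upper bound. Function and key names are illustrative.

```python
import math

SWITCH_PORTS = 32      # 100GbE ports per high-speed switch
BREAKOUT_FACTOR = 4    # one 100GbE port splits into four 25GbE ports


def server_facing_ports(num_servers: int) -> dict:
    """Estimate 100GbE switch ports consumed per switch by server connections."""
    direct = num_servers                                   # one 100GbE link per server
    breakout = math.ceil(num_servers / BREAKOUT_FACTOR)    # four 25GbE links per port
    used = direct + breakout
    return {"direct": direct, "breakout": breakout, "used": used,
            "free": SWITCH_PORTS - used}


def server_link_capacity_gbps() -> int:
    """Aggregate nominal link capacity of one server across both adapters."""
    return 2 * 100 + 2 * 25


print(server_facing_ports(6))        # {'direct': 6, 'breakout': 2, 'used': 8, 'free': 24}
print(server_link_capacity_gbps())   # 250
```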

A Spectrum Fusion HCI system connects to the client's data center network through the high-speed switches. Two 100GbE ports on each switch are reserved for this purpose. Connections between the system and the data center switches must be configured for LACP using pairs of cables that connect to the same port numbers on the two high-speed switches.
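The uplink rule in this paragraph (each LACP pair uses the same port number on both high-speed switches) is easy to validate before cabling. This is a minimal sketch; the switch names and port numbers are hypothetical, since the article does not say which ports are reserved for uplinks.

```python
from typing import Tuple

# An uplink endpoint is (switch name, port number). Names and numbers are illustrative.
Endpoint = Tuple[str, int]


def is_valid_uplink_pair(first: Endpoint, second: Endpoint) -> bool:
    """True when the two cables land on different switches at the same port number."""
    different_switches = first[0] != second[0]
    same_port_number = first[1] == second[1]
    return different_switches and same_port_number


print(is_valid_uplink_pair(("hs-switch-1", 31), ("hs-switch-2", 31)))  # True
print(is_valid_uplink_pair(("hs-switch-1", 31), ("hs-switch-2", 32)))  # False
print(is_valid_uplink_pair(("hs-switch-1", 31), ("hs-switch-1", 31)))  # False
```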
