Support for AI and ML Apps in IBM Spectrum Fusion HCI

CPU and Memory

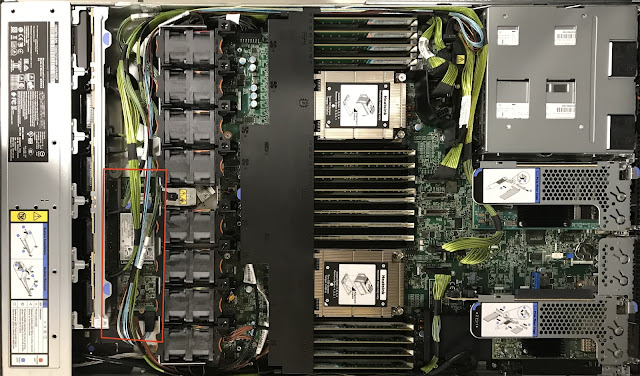

A Spectrum Fusion HCI GPU server is built from a Lenovo SR665 server that is two rack units (2U) high. (A rack unit is an industry-standard measure equal to 1.75 inches; a standard data center rack used to hold servers and switches is 42U tall.) Inside the server are two processor sockets, each populated with an AMD EPYC 7F72 processor. The EPYC 7F72 is a 24-core, 48-thread processor with a base clock speed of 3.2 GHz. With two of these processors, the server has a total of 48 cores and 96 threads available for running applications on Red Hat OpenShift.
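The core and thread totals follow directly from the socket count, the cores per processor, and two hardware threads per core (SMT). A minimal Python sketch of that arithmetic, using only the figures given above; the helper name `cpu_totals` is hypothetical:

```python
def cpu_totals(sockets: int, cores_per_socket: int, threads_per_core: int) -> tuple[int, int]:
    """Return (physical cores, hardware threads) for the whole server."""
    cores = sockets * cores_per_socket
    threads = cores * threads_per_core  # SMT: each core runs threads_per_core threads
    return cores, threads


# Figures from the text: 2 sockets, 24-core / 48-thread EPYC 7F72 (2 threads per core).
cores, threads = cpu_totals(sockets=2, cores_per_socket=24, threads_per_core=2)
print(f"{cores} cores, {threads} threads")  # 48 cores, 96 threads
```

The 96 hardware threads are what the operating system sees as logical CPUs.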

The AMD EPYC 7F72 processor is part of the AMD EPYC 2 processor family, sometimes referred to by its code name, "Rome." EPYC 2 has many features, but one of the most important is its support for PCIe Gen4. At a high level, PCIe is the technology used to connect the processor to storage drives, network interface cards, and other peripheral devices within a server. PCIe 4 doubles the per-lane transfer rate of PCIe 3 (16 GT/s versus 8 GT/s), so it represents a major improvement in system I/O performance.

Each AMD 7F72 processor has eight memory channels, for a total of 16 memory channels. The SR665 system board has two DIMM slots on each memory channel, meaning that the server can hold a total of 32 DIMMs. For best performance, AMD recommends populating all memory channels so that each channel has equal capacity. For that reason, the memory configuration of a GPU server uses 16 DIMMs, one on each memory channel, and leaves the other 16 memory slots on the system board empty. Each of the 16 DIMMs has a capacity of 32GB, for a total of 512GB of RAM. That translates to a bit more than 10GB of RAM per processor core (512GB / 48 cores ≈ 10.7GB).
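The memory population rule and the resulting capacity can be checked with a short Python sketch. It models the board as 2 sockets × 8 channels × 2 slots per channel (figures from the text). The helper names `build_layout` and `is_balanced` are hypothetical, and "balanced" here is a simplification: every channel is populated and all channels hold the same capacity.

```python
# Assumed model, taken from the text: 2 sockets x 8 channels, 2 DIMM slots per channel.
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
SLOTS_PER_CHANNEL = 2


def build_layout(dimm_gb: int, dimms_per_channel: int) -> dict[tuple[int, int], list[int]]:
    """Map (socket, channel) -> DIMM sizes in GB per slot; 0 marks an empty slot."""
    layout = {}
    for s in range(SOCKETS):
        for c in range(CHANNELS_PER_SOCKET):
            slots = [dimm_gb] * dimms_per_channel
            slots += [0] * (SLOTS_PER_CHANNEL - dimms_per_channel)
            layout[(s, c)] = slots
    return layout


def is_balanced(layout: dict[tuple[int, int], list[int]]) -> bool:
    """True if every channel is populated and all channels have equal capacity."""
    capacities = {sum(slots) for slots in layout.values()}
    return len(capacities) == 1 and 0 not in capacities


# One 32GB DIMM per channel, as in the GPU server configuration.
layout = build_layout(dimm_gb=32, dimms_per_channel=1)
total_gb = sum(sum(slots) for slots in layout.values())
installed = sum(1 for slots in layout.values() for gb in slots if gb > 0)
print(installed, total_gb, is_balanced(layout))  # 16 512 True
print(f"{total_gb / 48:.2f} GB per core")         # 10.67 GB per core
```

Filling both slots on only some channels, or leaving any channel empty, makes `is_balanced` return `False`, which mirrors why the GPU server leaves the second slot on every channel empty rather than adding a few extra DIMMs.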

GPU Adapters

The star components of the GPU server, of course, are the GPU adapters. Each GPU server has three NVIDIA A100 40GB PCIe 4 GPU adapters. These large full-height, full-length, dual-slot adapters are the reason the GPU server is a 2U server; they simply don't fit in a 1U server. These GPU adapters can draw a large amount of power, so the GPU servers are equipped with 1800W (230V) power supplies.
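As a rough illustration of why large power supplies are needed, the sketch below sums nominal thermal design power (TDP) figures for the GPUs and CPUs alone. The TDP values (250 W per A100 40GB PCIe adapter, 240 W per EPYC 7F72) are assumptions taken from vendor spec sheets, not from this post. TDP is not the same as measured draw, and memory, drives, NICs, and fans are deliberately left out.

```python
# ASSUMED nominal TDPs from vendor spec sheets (not stated in the post):
GPU_TDP_W = 250  # NVIDIA A100 40GB PCIe
CPU_TDP_W = 240  # AMD EPYC 7F72


def major_component_watts(gpus: int, cpus: int) -> int:
    """Sum nominal GPU and CPU TDPs; memory, drives, NICs, and fans are ignored."""
    return gpus * GPU_TDP_W + cpus * CPU_TDP_W


budget = major_component_watts(gpus=3, cpus=2)
psu_w = 1800  # power supply rating from the text (at 230V)
print(f"{budget} W, {budget / psu_w:.0%} of one {psu_w} W supply")  # 1230 W, 68% of one 1800 W supply
```

Even before the rest of the system is counted, the GPUs and CPUs alone account for roughly two thirds of a single supply's rating.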

The A100 GPU is the top end of the NVIDIA "Ampere" family of GPUs and provides significant performance improvements over preceding generations of NVIDIA GPUs. Its Tensor Cores support multiple math precisions, so they can accelerate a wide range of workloads. The A100 also supports Multi-Instance GPU (MIG), which allows it to be partitioned into as many as seven GPU instances, meaning that a single Spectrum Fusion HCI GPU server can provide up to 21 A100 instances to accelerate AI and machine learning workloads running in Red Hat OpenShift. Each instance is isolated at the hardware level with its own memory, cache, and compute cores.
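The figure of 21 instances is the seven-way maximum multiplied by three GPUs. Larger MIG profiles trade instance count for per-instance size. The sketch below assumes the per-GPU maximums for each A100 40GB MIG profile as listed in NVIDIA's MIG documentation (the post itself states only the seven-way maximum), and it models only the case where every GPU is partitioned with a single uniform profile. The helper `server_instances` is hypothetical.

```python
# ASSUMED: maximum instances per A100 40GB GPU for each MIG profile,
# per NVIDIA's MIG documentation. The post states only the 7-way maximum.
A100_40GB_MIG_MAX = {
    "1g.5gb": 7,
    "2g.10gb": 3,
    "3g.20gb": 2,
    "4g.20gb": 1,
    "7g.40gb": 1,
}


def server_instances(profile: str, gpus: int = 3) -> int:
    """Instances available when every GPU uses one uniform MIG profile."""
    return A100_40GB_MIG_MAX[profile] * gpus


for profile in A100_40GB_MIG_MAX:
    print(profile, server_instances(profile))
# 1g.5gb 21
# 2g.10gb 9
# 3g.20gb 6
# 4g.20gb 3
# 7g.40gb 3
```

Mixing profiles on the same GPU is also possible, but it is not modeled here.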

Storage

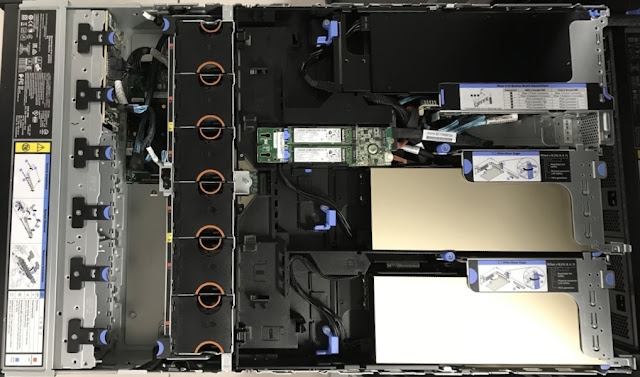

Every GPU server has a pair of 3.2TB SAS 12Gb/s SSDs. These U.2 form factor drives plug into the drive bays on the front of the GPU server. The drives are hot-swappable, meaning they can be added to and removed from the drive bays without first powering down the server. This pair of 3.2TB drives provides local storage for applications that run on the GPU server; the drives are not part of the storage cluster of the Spectrum Fusion HCI system.

All of the GPU servers also have a pair of 960GB M.2 SATA SSDs on which the Red Hat Enterprise Linux CoreOS (RHCOS) operating system used by Red Hat OpenShift is installed. The two M.2 drives are connected to a RAID controller and configured as RAID 1 (mirroring) for redundancy: should one of these operating system drives fail, the system can continue to function.
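The redundancy that RAID 1 provides can be shown with a toy model. Every write is mirrored to each healthy member, and a read succeeds as long as at least one member survives. This is an illustrative sketch only; the class `Raid1` and the helper `raid1_usable_gb` are hypothetical, and in the GPU server the mirroring is actually performed by the RAID controller.

```python
class Raid1:
    """Toy two-way mirror; in the real server the RAID controller does this work."""

    def __init__(self, members: int = 2) -> None:
        # One dict per member drive: block number -> data.
        self.disks: list[dict[int, bytes]] = [{} for _ in range(members)]
        self.failed: set[int] = set()

    def fail(self, member: int) -> None:
        """Mark a member drive as failed."""
        self.failed.add(member)

    def write(self, block: int, data: bytes) -> None:
        """Mirror the write to every healthy member."""
        for i, disk in enumerate(self.disks):
            if i not in self.failed:
                disk[block] = data

    def read(self, block: int) -> bytes:
        """Serve the read from the first healthy member."""
        for i, disk in enumerate(self.disks):
            if i not in self.failed:
                return disk[block]
        raise OSError("all mirror members have failed")


def raid1_usable_gb(member_sizes_gb: list[int]) -> int:
    """A mirror can hold only as much as its smallest member."""
    return min(member_sizes_gb)


array = Raid1()
array.write(0, b"boot data")
array.fail(0)                        # simulate losing one M.2 drive
print(array.read(0))                 # b'boot data'
print(raid1_usable_gb([960, 960]))   # 960
```

The trade-off is capacity. Two 960GB drives yield 960GB of usable space, not 1920GB, in exchange for surviving a single drive failure. Rebuilding onto a replacement drive is not modeled.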

Network

Each GPU server has two NVIDIA/Mellanox dual-port network interface cards (NICs). A dual-port ConnectX-6 100GbE NIC provides high-performance connectivity to the system's storage network, so that the large volumes of data typically processed by GPUs can be moved quickly from the storage cluster of Spectrum Fusion HCI. The ConnectX-6 NIC supports PCIe 4 and uses its extra bandwidth to move storage data quickly from the NIC to the GPUs.
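A back-of-envelope calculation shows why PCIe 4 matters for a dual-port 100GbE NIC. Both PCIe 3 and PCIe 4 use 128b/130b encoding, and PCIe 4 doubles the per-lane rate from 8 GT/s to 16 GT/s. The sketch assumes the ConnectX-6 card uses an x16 link and ignores PCIe protocol overhead, so real throughput is somewhat lower than these figures. The helper `pcie_gbytes_per_s` is hypothetical.

```python
# Per-lane transfer rates in gigatransfers/s (one direction), by PCIe generation.
GT_PER_LANE = {3: 8.0, 4: 16.0}
ENCODING = 128 / 130  # 128b/130b line encoding, used by both Gen3 and Gen4


def pcie_gbytes_per_s(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction bandwidth in GB/s, ignoring protocol overhead."""
    return GT_PER_LANE[gen] * lanes * ENCODING / 8  # 8 bits per byte


nic_gbytes_per_s = 2 * 100 / 8  # two 100 Gb/s ports at line rate = 25 GB/s
for gen in (3, 4):
    link = pcie_gbytes_per_s(gen)  # ASSUMED x16 link width
    print(f"Gen{gen} x16: {link:.2f} GB/s, enough for 2x100GbE: {link >= nic_gbytes_per_s}")
# Gen3 x16: 15.75 GB/s, enough for 2x100GbE: False
# Gen4 x16: 31.51 GB/s, enough for 2x100GbE: True
```

Under these assumptions, a PCIe 3 x16 slot could not keep both 100GbE ports busy at full line rate, while a PCIe 4 x16 slot has headroom to spare.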

Connections to the system's application network are provided by a dual-port ConnectX-4 25GbE NIC. Having physically separate NICs for the storage and application networks provides isolation and reduces the possibility of application traffic interfering with traffic on the storage network. Each server is connected to the management network using both a built-in Ethernet port on the system board and a Broadcom OCP Ethernet adapter.

As you can tell from the description above, the GPU server of Spectrum Fusion HCI has been designed to provide high-performance GPU support for accelerating AI and machine learning applications running in Red Hat OpenShift. In my next post, I will take a close look at the other optional server, the AFM server, that can be added to Spectrum Fusion HCI to give a boost to distributed storage networking.

Previous post: Inside the Storage/Compute Servers of IBM Spectrum Fusion HCI
